What are the methods of data transfer and how to choose the one that is ideal for your needs.

In the life of every computer user, sooner or later there comes a moment when you need to transfer some files to another user. If that user is a colleague or a neighbor on your staircase, the easiest way is to write the data to a disk or flash drive and hand it over in person. However, you may need to transfer data to another city or even to another country...

Direct File Transfer Methods

Let's start with the fact that files can be transferred both directly and through third-party services. There are not so many ways to directly transfer files, so I think it's most logical to deal with them right away.

The main advantage of direct data transfer between computers is speed and almost complete independence from third-party services. If you need, for example, to send a text file, a screenshot or just a funny picture, direct transmission will be the fastest way. Also, if you are confident in the stability of your Internet connection, you can transfer large files at maximum speed this way.

According to the principle of using (or not using) third-party programs and services, several subtypes of direct data transfer can be distinguished:

- Transfer over local networks. This method is the only truly autonomous one. That is, when choosing it, you do not need to use any third party programs or services. However, for its implementation, it requires the presence of these very local networks.

Local networks can be corporate (uniting the computers of one organization) or public (for example, a city local area network). The reach of the file transfer is limited by the scope of the network, and this is the only drawback of this method.

You can read about how to set up and work with a local network.

- Transfer through communication clients. This method is the second most popular and is easy to implement. If you communicate on the Internet not only through social media but also through special programs for instant messaging or video conferencing, you can use them to organize a direct file transfer gateway.

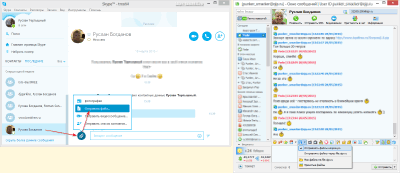

Almost any such application has additional buttons that provide the functionality we need. In , for example, direct data transfer is started by pressing the button with the paperclip icon (to the left of the message input field) and choosing the "Send file" item. In QIP there is a button for this with a floppy disk icon and the option "Send files directly" (the only limitation is that your recipient must also have QIP):

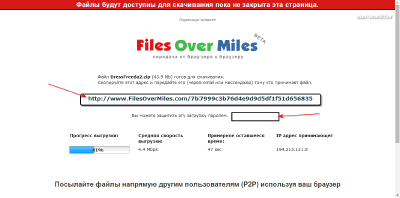

- Transfer via online gateways. This method uses direct HTTP tunneling through the intermediate servers of various services. Let's illustrate how everything works using the FilesOverMiles.com service as an example.

The essence of its work is very simple: you select a file of at least 1 megabyte on your computer that you need to transfer and get a unique link, by clicking on which, the recipient will be able to join your distribution. The tab with the created link cannot be closed until your file is downloaded completely, otherwise the transfer will be interrupted:

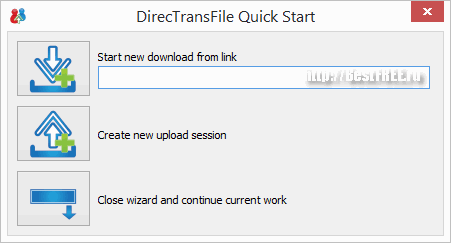

- Transfer with the help of special programs. There are also special programs for organizing direct gateways for transferring files from computer to computer. One of the simplest and smallest is DirecTransFile.

According to the principle of its work, it resembles the online gateway services described above, only, unlike them, it does not require us to visit any sites, and also supports downloading files and saving distributions.

The program has an English-language interface, but has a fairly simple wizard that contains only three buttons: "Download", "Create transfer" and "Minimize to tray". To transfer a file, we need to click the second button "Create new upload session", then specify the file or folder that needs to be sent and click the "Register" button to get a unique link to our distribution.

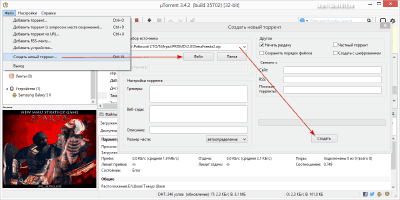

- Transfer via torrents. This method is essentially a combined one and is not completely independent. However, it is often used to transfer large files through the peer-to-peer BitTorrent network.

Its principle is to create a small torrent file, send that file to the recipient, and then let the recipient use it to download the large file itself. In the popular uTorrent client, the process of creating a distribution looks something like this: in the "File" menu, click "Create a new torrent" and, in the window that opens, specify the path to the file or folder that we want to distribute. We check that the list of trackers is empty and click the "Create" button. When asked if we want to create a file without being tied to a tracker, we answer "Yes" and that's it: we can send our torrent to the recipient.
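To make it clearer what that small torrent file actually contains, here is a minimal sketch in Python. It is not how uTorrent is implemented; it only builds the standard "info" dictionary (name, length, piece length, SHA-1 piece hashes) and serializes it in bencode without an "announce" key, which is what "not tied to a tracker" means. The function names and the default piece size are my own assumptions, and the file content is passed in as bytes for simplicity.

```python
import hashlib


def bencode(value):
    """Serialize ints, strings/bytes, lists and dicts in the bencode format."""
    if isinstance(value, int):
        return b"i" + str(value).encode("ascii") + b"e"
    if isinstance(value, str):
        value = value.encode("utf-8")
    if isinstance(value, bytes):
        return str(len(value)).encode("ascii") + b":" + value
    if isinstance(value, list):
        return b"l" + b"".join(bencode(item) for item in value) + b"e"
    if isinstance(value, dict):
        # Dictionary keys are byte strings and must be written in sorted order.
        items = [(k.encode("utf-8") if isinstance(k, str) else k, v)
                 for k, v in value.items()]
        items.sort(key=lambda pair: pair[0])
        return b"d" + b"".join(bencode(k) + bencode(v) for k, v in items) + b"e"
    raise TypeError(f"cannot bencode {type(value).__name__}")


def make_trackerless_torrent(name, data, piece_length=262144):
    """Build the bytes of a single-file .torrent with no tracker (hypothetical helper)."""
    # The file is cut into fixed-size pieces; each piece gets a 20-byte SHA-1 hash.
    pieces = b"".join(
        hashlib.sha1(data[offset:offset + piece_length]).digest()
        for offset in range(0, len(data), piece_length)
    )
    info = {
        "name": name,
        "length": len(data),
        "piece length": piece_length,
        "pieces": pieces,
    }
    return bencode({"info": info})  # no "announce" key: trackerless
```

The resulting bytes can be written to a file with the .torrent extension. The recipient's client uses the piece hashes to verify each downloaded piece.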

Indirect file transfer

Direct data transfer is good: the speed is high, so is the level of security, and there are no limits. However, direct file transfer has one drawback: your computer must stay turned on for the whole time the file is being downloaded. If you or your recipient have a slow Internet connection, such a transfer may take a day or more (depending on the file size)!

Therefore, in some cases it is more convenient to use third-party services to host your data and open access to it. Unlike direct transfer, here you first fully upload your file to the selected file storage and then activate a public link for it, through which the recipient can download it.

The advantage of this file sharing method is that you do not need to keep your computer on all the time - all uploaded data is available online. However, there are nuances here too. For example, a service may have a maximum file size limit (usually 1 to 10 gigabytes) or a limited data retention period. I propose to consider all modern options for indirect file exchange:

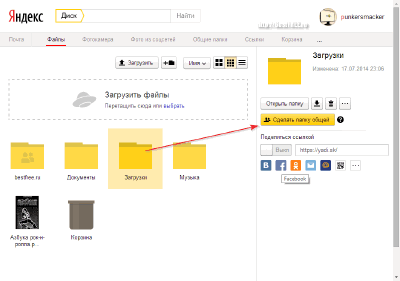

- Usage . This is perhaps one of the most modern and convenient file sharing options, which allows you to quite flexibly manage your online file storage and access levels to it.

There are quite a lot of online disks today, however, in my opinion, everything is most conveniently implemented on the service. There are no restrictions on the size of uploaded files, 10 gigabytes of space are given (with the ability to increase to 20 for free by inviting friends), and there is also a very convenient access control system to separate folders and files.

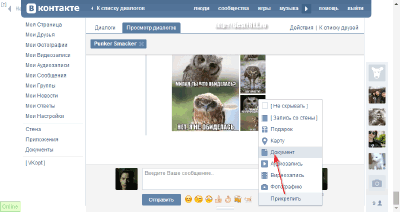

- Data transmission via social networks. If you have a VKontakte, Facebook or Mail.Ru account, then you can send small files attached directly to messages.

In this regard, VKontakte is the best. It allows you to attach files up to 200 megabytes, while for Facebook and My World from Mail.Ru the upper limit is only 25 megabytes. However, in the case of Mail.Ru, everything is not so simple... The fact is that files exceeding the prescribed size are automatically offered for upload to the "Mail.Ru Cloud", which is not as convenient as Yandex.Disk, but gives you up to 100 gigabytes of free space!

A file uploaded to the "Cloud" in this way will be attached to the message as a link. However, its size should not exceed 2 GB. Otherwise, it will have to be split into parts with a file manager, and the recipient will have to reassemble it on their PC with the same tool after downloading all the parts.
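If you do not have a suitable file manager at hand, the same splitting and reassembly can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function names and the ".001, .002, ..." naming scheme are invented here, and each part is read into memory in full, so for real multi-gigabyte files you would copy in smaller blocks.

```python
from pathlib import Path


def split_file(path, part_size):
    """Split a file into numbered parts (name.001, name.002, ...) of at most part_size bytes."""
    path = Path(path)
    parts = []
    with path.open("rb") as source:
        index = 1
        while True:
            chunk = source.read(part_size)
            if not chunk:
                break  # end of the source file
            part = path.with_name(f"{path.name}.{index:03d}")
            part.write_bytes(chunk)
            parts.append(part)
            index += 1
    return parts


def join_files(parts, target):
    """Concatenate the parts, in the given order, into the target file."""
    target = Path(target)
    with target.open("wb") as destination:
        for part in parts:
            destination.write(Path(part).read_bytes())
    return target
```

The sender would call something like `split_file("movie.mkv", 2 * 1024**3 - 1)` and upload each part separately; the recipient downloads all parts and calls `join_files` with the parts sorted by name.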

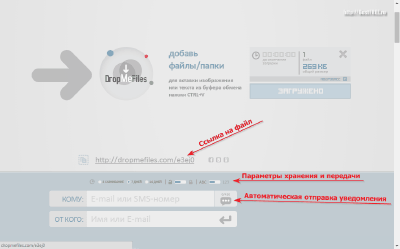

- Data transfer via temporary download services. Another way, which has existed for about 5-6 years, is to transfer data through special services for one-time downloads. Their essence boils down to the fact that the file you uploaded is automatically deleted either immediately after the recipient has downloaded it, or after a couple of days.

In my opinion, one of the best Russian-language services of this kind is DropMeFiles.com. You can upload your data up to 50 gigabytes here without registration, and your files will be stored here for up to 14 days!

Upon completion of uploading the file to the server, you will be given a link to it, which you can immediately send via e-mail or SMS message. Also pay attention to the storage and processing options for your file. By default, it is stored for 7 days, however, you can make the link one-time, or extend it up to 2 weeks. Optionally, it is possible to protect the file with a password and transform the link into a set of numbers to simplify downloading the file using SmartTV.

There is also another way: data transfer through so-called file hosting services. These are services that offer to download a file either for money without restrictions, or for free, but rather slowly (at about 20 - 128 kbps). I think this method (like some others) does not deserve our attention because of the inconvenience for the recipient, but I simply could not leave it unmentioned :).

Building your own server

We have considered almost all options for free file sharing, but there is one more that we have not mentioned yet. To transfer files, you can use not only "foreign" services, but also your own full-fledged server!

The easiest way to get a server at your disposal is to buy a domain on the Internet and attach hosting to it. On your hosting you can set up anything from your own website to a file storage; however, for some people this option can be very expensive...

If you don't want to pay for a full-fledged hosting, but you have a desire to have your own server, you can go the other way - turn your own PC into a server!

To turn a computer into a server, you do not need much: unlimited access to the Internet (which many people already have) and a static IP address (my provider offers this service for only an additional 10 hryvnia per month, which is approximately 24 rubles at the current exchange rate).

If you already have an unlimited connection and a static address, it remains to decide on the software that you will use to implement your ideas. Since in the context of this article we only want to create a file server (with a small load and without a website on it), the configuration of a regular office PC will be enough for us, and we don't even have to install a special server operating system!

It remains only to decide on the protocol for transferring our files. For a regular file server, I recommend using FTP. You can implement it using the FileZilla Server program.

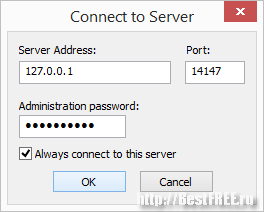

When installing the server, you can leave all of its settings unchanged. Upon completion of the installation, you will see a dialog for entering the control panel:

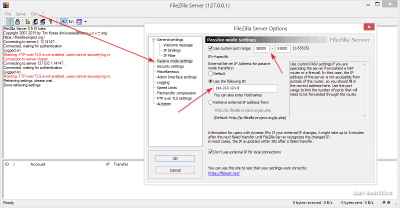

At the first start, you need to specify the local address of the server (by default 127.0.0.1), the port (you can leave it alone as well) and the administrator password, then click "Ok" to get to the server control panel. The first step is to set it up. In my case, the computer is connected not directly but through a router, so the active mode of the server is not available to me. Let's consider the passive mode settings. To do this, go to the "Edit" menu, choose the "Settings" item and, in the window that opens, go to the "Passive mode settings" section:

Here we need to specify the range of working ports for accessing the server and our external IP address, by which we will access our server from remote computers.

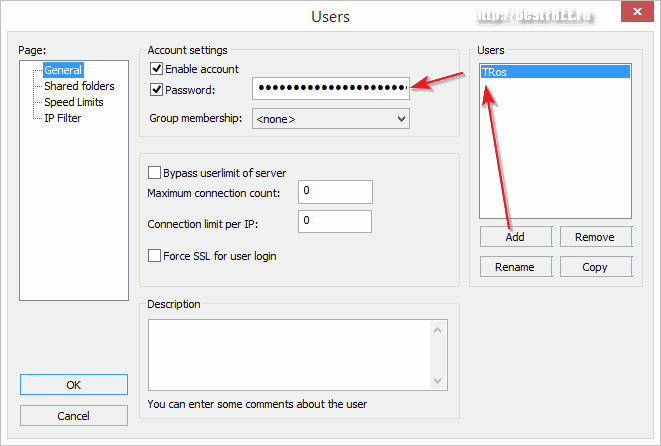

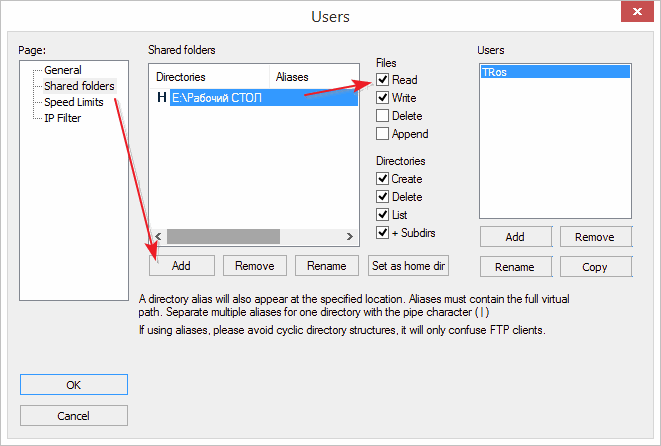

Close the settings and again go to the "Edit" menu, but this time we enter the "Users" section:

On the first tab ("General"), click the "Add" button and set the new user's name and password. When the created user appears in the list, go to the next tab ("Shared folders"), add the folders that will be available via FTP, and configure their permissions to the right of the folder list (by default the folders are not writable, because the "Write" permission is not enabled):

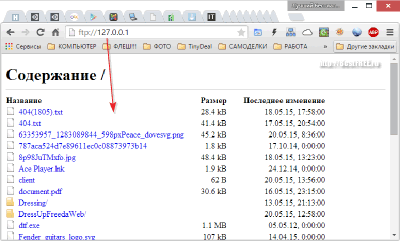

If you did everything right, then you can test the performance of your server. To do this, if you want to view files from a local PC, enter the address ftp://127.0.0.1 (or your external IP if you need access from a third-party computer) into the address bar of your browser and press Enter. You should be prompted for a name and password, after which a list of files in the folders you have opened for access will be displayed:

Above, only the general principles of setting up the server were described, however, some points may differ for you. To configure everything correctly, I advise you to study.

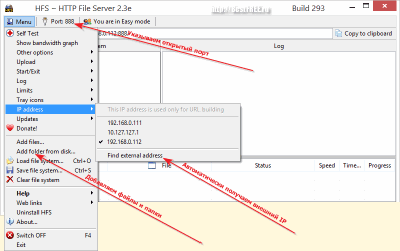

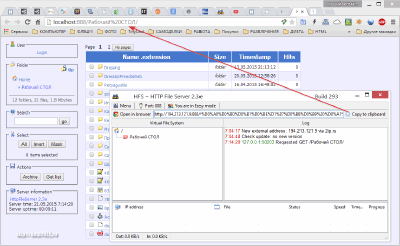
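Besides the browser, the server can be checked from a script. Below is a minimal sketch that uses Python's standard ftplib module to log in in passive mode and list the shared folder. The function name, the default port 21 and the `ftp_cls` parameter (added only so the function can be tried without a real server) are my assumptions; substitute the user name and password you created in FileZilla Server.

```python
from ftplib import FTP


def list_shared_files(host, user, password, port=21, timeout=10, ftp_cls=FTP):
    """Connect to an FTP server in passive mode and return the names in its root folder."""
    ftp = ftp_cls()
    ftp.connect(host, port, timeout=timeout)
    try:
        ftp.login(user, password)
        ftp.set_pasv(True)  # passive mode, as configured for a server behind a router
        return ftp.nlst()
    finally:
        ftp.quit()
```

For a local check you would call `list_shared_files("127.0.0.1", "user", "password")`; from another computer, replace the address with your external IP.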

Alternatively, you can create not an FTP, but an HTTP server. The easiest way to do this is with the HFS portable program. If you have access to the Internet through a router, then in order to use this program, you will need to open a specific port in it (how to do this for your router model, you can find it on Google) and register it in a special field of the program.

The second step in setting up the server is to specify the external IP address. HFS can detect it automatically. To do this, go to the "Menu" menu, highlight the "IP address" section and click the bottom item, "Find external address". If everything is fine, the external address will be detected and will appear in the top address bar instead of the current local one.

Now we only need to specify the files and folders that will be available through our server. To do this, go to the "Menu" again and select the item "Add files" or "Add folder from disk", depending on what we want to share.

Now, in order to connect to the created server, we need to enter its address in the browser, this time without specifying the protocol as we did for the FTP connection. For more convenience, HFS automatically generates a link to itself, which can be copied to the clipboard by clicking the "Copy to clipboard" button.
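The idea behind such a program, sharing a folder over HTTP, can also be sketched with Python's standard library. This is not how HFS itself works, just a comparable minimal example; the function name and the defaults (local address, automatically chosen port) are my assumptions.

```python
import functools
import threading
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


def start_file_server(directory, host="127.0.0.1", port=0):
    """Serve the files of `directory` over HTTP in a background thread.

    port=0 lets the operating system pick a free port; the chosen port
    is available afterwards as server.server_address[1].
    """
    handler = functools.partial(SimpleHTTPRequestHandler, directory=str(directory))
    server = ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

To make the folder reachable from outside, you would bind to "0.0.0.0" with a fixed port and forward that port on the router, just as described for HFS. Call `server.shutdown()` when you no longer need the server.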

Conclusions

Summarizing all of the above, we can say that today there are many ways to transfer files from computer to computer. The choice of a specific transmission option depends only on the user's preferences and the amount of data being transferred.

If you plan to exchange files directly with another person on a regular basis, I can recommend special programs such as DirecTransFile, discussed above. If you need to transfer a large amount of information at once, I recommend using online instant data transfer services. And if you often have to transfer the same files, it may make sense to think about creating your own file server.

Successful file sharing and high data transfer rates!

P.S. It is allowed to freely copy and quote this article, provided that an open active link to the source is indicated and the authorship of Ruslan Tertyshny is preserved.

Introduction

The complex nature and dynamism of modern world economic relations has led to the need to create new telecommunication technologies that generate new services and, accordingly, an increasing need for them.

The volume and methods of informing specialists with the help of computer communications have changed radically in recent years. And if earlier such tools were intended only for a narrow circle of specialists and experienced users, now they are designed for the widest audience.

Currently, data transmission using computers, the use of local and global computer networks is becoming as common as the computers themselves.

The purpose of this manual is to prepare students for the skillful use of local and global computer networks, communication equipment and software tools.

After completing the study of the presented section, students should be able to navigate among the rich variety of proposed equipment and programs, the principles of functioning of local area networks, network protocols and the basics of Internet technologies.

The presentation of the material in all chapters is based on many examples. After each chapter, there are control questions to consolidate the studied theoretical material, tests for self-control, as well as a list of recommended literature for studying the course.

1 General information about computer networks

1.1 Purpose of computer networks

Computing networks (CN) appeared a long time ago. Since the dawn of computers, there have been huge systems known as time-sharing systems. They allowed the central computer to be used with remote terminals. Such a terminal consisted of a display and a keyboard. Outwardly, it looked like an ordinary personal computer, but did not have its own processor unit. Using such terminals, hundreds and sometimes thousands of employees had access to the central computer.

This mode was provided due to the fact that the time-sharing system divided the operating time of the central computer into short time intervals, distributing them among users. At the same time, the illusion of simultaneous use of the central computer by many employees was created.
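The time-slicing principle described above can be illustrated with a small round-robin sketch. It is a simplified model under stated assumptions, not a description of any particular historical system: the function name, the job representation (terminal name mapped to required time units) and the fixed quantum are chosen here for illustration.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Share one processor among jobs in fixed time slices.

    jobs maps a terminal name to the processor time it needs (positive units);
    the result is the ordered list of (name, units_run) slices.
    """
    queue = deque(jobs.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        schedule.append((name, run))
        if remaining > run:
            # Unfinished job goes to the back of the queue and waits for its next slice.
            queue.append((name, remaining - run))
    return schedule
```

With a short quantum, every terminal gets the processor again quickly, which is what creates the illusion of simultaneous use.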

In the 1970s, mainframe computers gave way to minicomputer systems using the same time-sharing regime. But technology has evolved, and since the late 70s, personal computers have appeared in the workplace. However, stand-alone personal computers do not provide direct access to the entire organization's data and do not allow sharing of programs and equipment.

From this moment begins the modern development of computer networks.

A computer network is a system consisting of two or more remote computers connected using special equipment and interacting with each other via data transmission channels.

The simplest network consists of several personal computers interconnected by a network cable (Figure 1). A special network adapter card (NIC) is installed in each computer to provide communication between the computer's system bus and the network cable /1/.

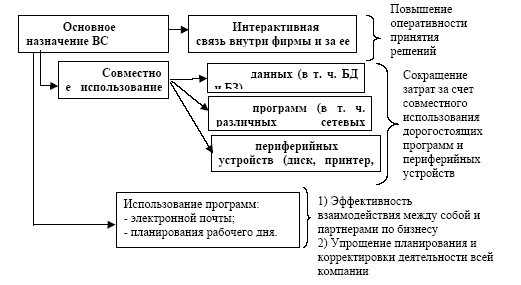

In addition, all computer networks operate under the control of a special network operating system (NOS - Network Operating System). The main purpose of computer networks is the sharing of resources and the implementation of interactive communication both within one company and outside of it (Figure 2).

Figure 2 - Purpose of the computer network

Resources are data (including corporate databases and knowledge bases), applications (including various network programs), and peripheral devices such as printers, scanners, modems, etc.

Before personal computers were networked, each user had to have their own printer, plotter and other peripheral devices, and the same software used by a group of users had to be installed on each of the computers.

Another attractive side of the network is the availability of e-mail programs and business scheduling. Thanks to them, employees effectively interact with each other and business partners, and planning and adjusting the activities of the entire company is much easier. The use of computer networks allows: a) to increase the efficiency of the company's personnel; b) reduce costs by sharing data, expensive peripherals and software (applications).

The main characteristics of a computer network are:

- operational capabilities of the network;
- temporal characteristics;
- reliability;
- performance.

The operational capabilities of the network are characterized by such features as:

- providing access to application software, databases, knowledge bases, etc.;
- remote input of tasks;
- file transfer between network nodes;
- access to remote files;
- providing reference information about information and software resources;
- distributed data processing on several computers, etc.

The temporal characteristics of the network determine the duration of servicing user requests:

- average access time, which depends on the size of the network, the remoteness of users, the load and bandwidth of communication channels, etc.;
- average service time.

Reliability characterizes the dependability of both individual network elements and the network as a whole.

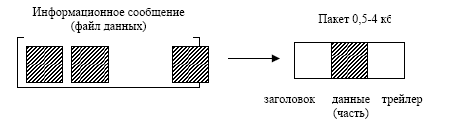

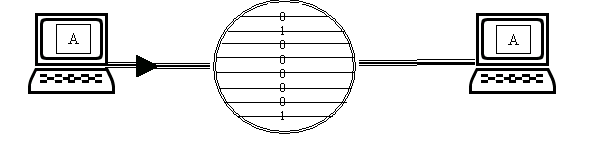

Packet as the basic unit of information in computer networks. When data is exchanged between personal computers, any information message is divided by the data transfer programs into small data blocks, which are called packets (Figure 3).

Figure 3 - Information message

This is because data is usually contained in large files, and if the transmitting computer sent a file in its entirety, it would occupy the communication channel for a long time and tie up the entire network, that is, it would interfere with the interaction of other network participants. In addition, an error during the transmission of a large block costs much more time, because the whole block has to be retransmitted rather than one small packet.

A packet is the basic unit of information in computer networks. When data is divided into packets, the transmission speed increases so much that each computer on the network is able to receive and transmit data almost simultaneously with the other PCs /2/.

When splitting data into packets, the network operating system adds special service information to the actual transmitted data:

- a header that indicates the sender's address, as well as information for assembling the data blocks into the original information message when they are received by the recipient;
- a trailer that contains information for checking that the packet was transmitted without errors. When an error is detected, the transmission of the packet must be repeated.
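The packet structure described above can be sketched as follows. This is a simplified illustration, not the format of any real network operating system: the dictionary layout, the field names ("sender", "seq", "total") and the use of a CRC-32 checksum as the trailer are assumptions made for the example.

```python
import zlib


def make_packets(message, sender, payload_size):
    """Split a message (bytes) into packets with a header and a checksum trailer."""
    total = (len(message) + payload_size - 1) // payload_size
    packets = []
    for seq in range(total):
        payload = message[seq * payload_size:(seq + 1) * payload_size]
        packets.append({
            # Header: sender address plus data needed to reassemble the message.
            "header": {"sender": sender, "seq": seq, "total": total},
            "payload": payload,
            # Trailer: checksum used to detect transmission errors.
            "trailer": zlib.crc32(payload),
        })
    return packets


def reassemble(packets):
    """Check every packet and rebuild the original message in sequence order."""
    ordered = sorted(packets, key=lambda packet: packet["header"]["seq"])
    for packet in ordered:
        if zlib.crc32(packet["payload"]) != packet["trailer"]:
            seq = packet["header"]["seq"]
            raise ValueError(f"packet {seq} is corrupted and must be retransmitted")
    return b"".join(packet["payload"] for packet in ordered)
```

Because each packet carries its own sequence number and checksum, packets may arrive in any order, and only a damaged packet has to be sent again rather than the whole message.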

Ways to organize data transfer between personal computers. Data transfer between computers and other devices occurs in parallel or sequentially.

So most personal computers use the parallel port to work with the printer. The term "parallel" means that data is transmitted simultaneously over several wires.

To send a byte of data across a parallel connection, the computer simultaneously sets up the entire byte on eight wires. The parallel connection scheme can be illustrated in Figure 4.

Figure 4 - Parallel connection

As can be seen from the figure, a parallel connection over eight wires allows you to transfer a byte of data at the same time.

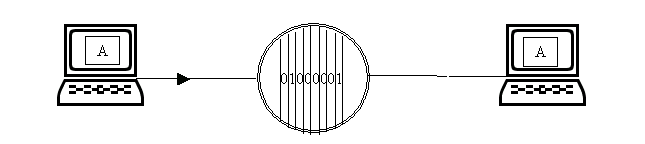

In contrast, a serial connection involves the transfer of data in turn, bit by bit. In networks, this way of working is most often used, when bits line up one after another and are sequentially transmitted (and received too), which is illustrated in Figure 5.

Figure 5 - Serial connection

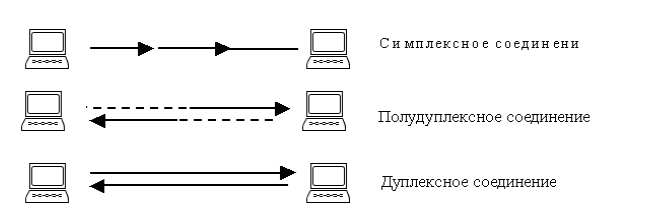
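The difference between the two connection types can be shown with a short sketch. It models only the ordering of bits, not electrical signalling; the function names are illustrative, and sending the most significant bit first is an assumption (real serial interfaces may use the opposite order).

```python
def byte_to_bits(value):
    """Return the eight bits of a byte, most significant bit first."""
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]


def send_parallel(data):
    """Parallel link: each step places a whole byte on eight wires at once."""
    return [byte_to_bits(byte) for byte in data]


def send_serial(data):
    """Serial link: each step places a single bit on one wire."""
    return [bit for byte in data for bit in byte_to_bits(byte)]
```

For two bytes, the parallel model needs two steps of eight bits each, while the serial model needs sixteen steps of one bit each.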

Three different methods are used for communication over network links: simplex, half-duplex and duplex connections.

A simplex connection is one in which data moves in only one direction (Figure 6). A half-duplex connection allows data to move in both directions, but at different times.

Finally, a duplex connection allows data to move in both directions at the same time.

Figure 6 - Connection types

Client-Server Architecture

A computer network (computing network, CN) is a set of computers and terminals connected via communication channels into a single system that meets the requirements of distributed data processing and the sharing of common information and computing resources.

Distributed systems and computer networks are often confused. When working with a distributed system, the user may not have the slightest idea on which processors, where, and with which specific physical resources the program will be executed. On a network, since all the machines there are autonomous, the user must do everything explicitly. The main difference between these systems lies in the organization of their software. Information is transferred in both cases, but in a network the transfer is controlled by the user, while in a distributed system it is controlled by the system.

Distributed computing in computer networks is based on the "client-server" architecture, which has become the dominant method of data processing. The terms "client" and "server" refer to the roles played by various components in a distributed computing environment. The "client" and "server" components do not have to run on different machines, although they usually do - the client application is located on the user's workstation, and the server is on a special dedicated machine. The most common types of servers are file servers, database servers, print servers, e-mail servers, Web servers, and others. Recently, multifunctional application servers have been intensively introduced.

The client forms a request to the server to perform the appropriate functions. For example, a file server provides storage of public data, organizes access to it and transfers data to the client. Data processing is distributed in one ratio or another between the server and the client. More recently, the proportion of processing per client has come to be referred to as the "thickness" of the client.
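The request-response interaction between a client and a file server can be sketched with standard Python sockets. This is a toy model under stated assumptions: the one-line text protocol, the in-memory `SHARED_FILES` dictionary and all function names are hypothetical and chosen only to show the two roles.

```python
import socket
import socketserver
import threading

# Hypothetical public data stored on the server side.
SHARED_FILES = {"readme.txt": b"public data"}


class FileRequestHandler(socketserver.StreamRequestHandler):
    """Server role: read a file name, send back the file contents."""

    def handle(self):
        name = self.rfile.readline().decode("utf-8").strip()
        self.wfile.write(SHARED_FILES.get(name, b""))


def start_server(host="127.0.0.1", port=0):
    """Start the file server in a background thread (port 0 = any free port)."""
    server = socketserver.ThreadingTCPServer((host, port), FileRequestHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def request_file(host, port, name):
    """Client role: form a request and read the reply until the server closes the connection."""
    with socket.create_connection((host, port), timeout=5) as connection:
        connection.sendall(name.encode("utf-8") + b"\n")
        chunks = []
        while True:
            chunk = connection.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)
```

Here both components run on one machine, which matches the remark that client and server are roles rather than separate computers. All processing happens on the server, so the client in this sketch is about as "thin" as it can be.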

The development of the "client-server" architecture follows a spiral, and currently there is a trend towards centralization of computing, that is, the replacement of "thick" clients (workstations based on high-performance PCs equipped with powerful software to support applications, multimedia, navigation and graphical interfaces) with "thin" clients. The historical forerunners of "thin" clients were alphanumeric terminals that connected to host computers, or mainframes, through specialized interfaces or universal serial ports.

Mainframes are a classic example of the centralization of computing, since all computing resources, storage and processing of huge amounts of data were concentrated in a single complex. The main advantages of a centralized architecture are ease of administration and information protection. All terminals were of the same type - therefore, the devices at the user's workplaces behaved predictably and could be replaced at any time, the costs of servicing the terminals and communication lines were also easily predictable /3/.

With the advent of personal computers, it has become possible to have computing and information resources on the user's desktop and manage them at their own discretion using a color windowed graphical interface. The increase in PC performance made it possible to transfer parts of the system (user interface, application logic) to run on a personal computer, directly at the workplace, and leave the data processing functions on the central computer. The system has become distributed - one part of the functions is performed on the central computer, the other - on a personal computer, which is connected to the central one through a communication network. Thus, a client-server model of interaction between computers and programs on the network appeared, and on this basis, application development tools for the implementation of information systems began to develop.

However, the two-tier client-server architecture has such significant disadvantages as administrative complexity and low information security, which are especially noticeable when compared with the centralized mainframe architecture (Table 1).

Table 1 - Comparison between centralized mainframe architecture and two-tier client-server architecture

| Centralized mainframe architecture | Two-tier client-server architecture |
| --- | --- |
| The entire information system resides on the central computer | A system consisting of a large number of heterogeneous computers running heterogeneous applications is difficult to administer |
| Workplaces have simple access devices that let the user manage processes in the information system | Computers are difficult to configure and troubleshoot; maintenance costs are high |
| The access device communicates with the central computer through a simple, hardware-implemented protocol | The computers are highly vulnerable to viruses and unauthorized access |

Classification of computer networks

To date, there is no generally accepted classification of networks. There are two generally accepted factors to distinguish between them: transmission technology and scale.

There are two main types of transmission technologies used in networks:

- broadcast (from one to many);
- point-to-point.

Broadcast networks have a single data transmission channel that is used by all machines on the network. A packet sent by one machine is received by all the other machines. The address of the recipient is specified in a certain field of the packet. Each machine checks this field: if it finds its own address there, it proceeds to process the packet; if the field does not contain its address, it simply ignores the packet.

Broadcast networks typically have a mode where one packet is addressed to all machines on the network. This is the so-called broadcast mode. There is a group broadcasting mode in such networks - the same packet is received by machines belonging to a certain group in the network.
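The address check performed by each machine can be modelled in a few lines. This is a sketch under assumptions: the class and function names, the "*" marker for the broadcast address and the representation of groups as sets of names are invented for illustration.

```python
BROADCAST = "*"  # assumed marker meaning "all machines on the network"


class Machine:
    """A machine on a shared channel that keeps only packets addressed to it."""

    def __init__(self, address, groups=()):
        self.address = address
        self.groups = set(groups)
        self.inbox = []

    def receive(self, packet):
        destination = packet["dest"]
        # Accept own address, the broadcast address, or a group the machine belongs to.
        if destination == self.address or destination == BROADCAST or destination in self.groups:
            self.inbox.append(packet["data"])
        # Any other packet is silently ignored.


def transmit(machines, sender, packet):
    """Put a packet on the shared channel: every machine except the sender sees it."""
    for machine in machines:
        if machine is not sender:
            machine.receive(packet)
```

Every machine sees every packet; the filtering happens only at the receiving side, which is the defining property of a broadcast network.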

Point-to-point networks connect individual pairs of machines with separate channels. Therefore, before a packet reaches its destination, it may pass through several intermediate machines. These networks need routing, and the speed of message delivery and the distribution of load in the network depend on how effective the routing is.
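As an illustration of routing, the sketch below finds a route with the fewest intermediate hops using breadth-first search. Real networks use more elaborate routing algorithms and metrics; the function name and the representation of the network as a dictionary of neighbour lists are assumptions made for the example.

```python
from collections import deque


def shortest_route(links, source, destination):
    """Return a route with the fewest hops from source to destination, or None.

    links maps each machine to the machines it has a direct channel to.
    """
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == destination:
            # Walk back through the recorded predecessors to build the route.
            route = []
            while node is not None:
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbour in links.get(node, ()):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None
```

Each machine on the returned route forwards the packet to the next one, so the packet crosses as few channels as possible.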

Broadcast type networks are usually used in geographically small areas. Point-to-point networks - for building large networks covering large regions /4/.

Network scale is another criterion for classifying networks. The length of communication provided by a computer network can be different: within the same premises, building, enterprise, region, continent or the whole world.

Local area networks (LANs) are computer networks concentrated in a small area (usually within a radius of no more than 1-2 km). In general, a local area network is a communication system owned by one organization. Due to the short distances in local networks, it is possible to use relatively expensive high-quality communication lines, which make it possible to achieve high data exchange rates of the order of 100 Mbps using simple data transmission methods. As a result, the services provided by local networks are very diverse and are usually delivered online.

Wide area networks (WANs), or global networks, combine geographically dispersed computers that can be located in different cities and countries. Since laying high-quality communication lines over long distances is very expensive, WANs often use existing communication lines that were originally intended for completely different purposes. For example, many global networks are built on the basis of general-purpose telephone and telegraph channels. Due to the low speeds of such communication lines (tens of kilobits per second), the set of services provided in global networks is usually limited to file transfer, mainly not online but in the background, using e-mail. For the stable transmission of discrete data over low-quality communication lines, methods and equipment are used that differ significantly from those typical for local networks. As a rule, complex data control and recovery procedures are used here, since the most typical mode of data transmission over a territorial communication channel is associated with significant signal distortions.

Metropolitan area networks (MANs), or city networks, are a less common type of network. These networks appeared relatively recently. They are designed to serve the territory of a large city, a metropolis. While LANs are best suited for short-distance resource sharing and broadcasts, and WANs work over long distances but with limited speed and a limited set of services, metropolitan networks fall somewhere in between. They use digital backbones, often fiber-optic, with speeds of 45 Mbps or more, and are designed to interconnect local networks across a city and to connect local networks to global networks. These networks were originally designed for data transmission, but they also support services such as videoconferencing and integrated voice and text transmission. Metropolitan area network technology was developed by local telephone companies.

Local Area Networks

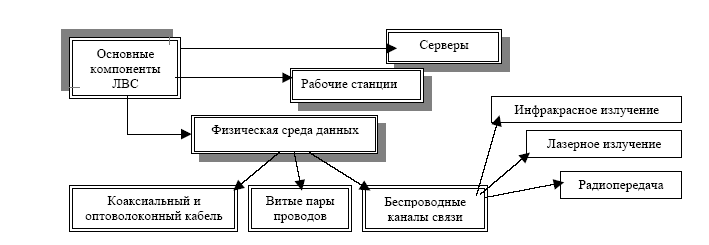

Local area networks (LANs) are now widely used because of their low complexity and low cost. They are used to automate commercial and banking activities, as well as to build distributed control and information systems. LANs have a modular organization (Figure 7).

Their main components are:

- servers - hardware and software systems that manage the distribution of shared network resources;

- workstations - computers that access the network resources provided by the server;

- the physical transmission medium - coaxial and fiber-optic cables, twisted-pair wires, and wireless channels (infrared, laser, radio).

There are two main types of local area networks: peer-to-peer and server-based. The differences between them are of fundamental importance, since they determine the different capabilities of these networks.

Peer-to-peer networks. In these networks all computers are equal: there is no hierarchy among them and no dedicated server. As a rule, each PC functions both as a workstation and as a server, so no single PC is responsible for administering the entire network. Users decide for themselves which data and resources on their computer to share over the network.

A workgroup is a small team united by a common goal and interests, so peer-to-peer networks most often contain no more than 10 computers. These networks are relatively simple: since each PC is both a workstation and a server, there is no need for a powerful central server or the other components required in more complex networks.

Support for peer-to-peer networking is built into operating systems such as MS Windows NT Workstation, MS Windows 95/98 and Windows 2000. Therefore, no additional software is required to set up a peer-to-peer network, and a simple cabling system is enough to connect the computers.

Although peer-to-peer networks are quite suitable for the needs of small firms, there are situations where their use is inappropriate. In these networks, protection means setting a password on a shared resource (e.g., a directory). It is very difficult to manage protection centrally in a peer-to-peer network because:

- each user sets it up independently;

- shared resources can be on any PC, not just on a central server.

This situation is a threat to the entire network; in addition, some users may not set up protection at all. If confidentiality is critical for the company, such networks are not recommended. In addition, since each PC in these LANs functions as both a workstation and a server, users need enough knowledge to act both as users and as administrators of their own computers.

Server-based networks. If more than 10 users are connected, a peer-to-peer network may not perform well. Therefore, most networks use dedicated servers. A dedicated server is one that functions only as a server (and is not used as a client or workstation). Such servers are specially optimized to process requests from network clients quickly and to manage the protection of files and directories.

The range of tasks that servers perform is diverse and complex. To accommodate the increasing needs of users, LAN servers have become specialized. For example, in the Windows NT Server operating system, there are various types of servers:

File servers and print servers. They control user access to files and printers.

Application servers (including database server, Web server). Application parts of client-server applications (programs) are executed on them.

Mail servers - manage the transmission of electronic messages between network users.

Fax servers - manage the flow of incoming and outgoing fax messages through one or more fax modems.

Communication servers - manage the flow of data and mail messages between this LAN and other networks or remote users via modem and telephone line. They also provide access to the Internet.

Directory Services Server - designed to search, store and protect information on the network. Windows NT Server combines PCs into logical groups-domains, the security system of which gives users different access rights to any network resource.

Departmental, campus, corporate networks

Another popular way to classify networks is to classify them by the size of the business unit within which the network operates. A distinction is made between department networks, campus networks, and corporate networks.

Department networks are used by a relatively small group of employees working in the same department of an enterprise. These employees perform common tasks, such as accounting or marketing. A department is usually assumed to have up to 100-150 employees.

The main purpose of a departmental network is to share local resources such as applications, data, laser printers, and modems. Typically, departmental networks have one or two file servers and no more than thirty users. They are usually not divided into subnets. Most of the enterprise's traffic is localized in these networks. A departmental network is usually built on a single network technology, such as Ethernet or Token Ring.

The tasks of managing a departmental network are relatively simple: adding new users, fixing simple failures, installing new nodes, and installing new software versions. Such a network can be managed by an employee who devotes only part of their time to administration. Most often the department's network administrator has no special training but is simply the person in the department who understands computers best, and so ends up in charge of the network.

There is another type of network, close to departmental networks, - workgroup networks. Such networks include very small networks, including up to 10-20 computers. The characteristics of workgroup networks are almost the same as the characteristics of departmental networks described above. Properties such as network simplicity and homogeneity are at their strongest here, while departmental networks can in some cases approach the next largest network type, campus networks.

Campus networks got their name from the English word campus, meaning the grounds of a university. It was on university campuses that it often became necessary to combine several small networks into one large network. The name is no longer tied to universities and is used for the networks of any enterprise or organization.

The main features of campus networks are as follows. Networks of this type combine many networks of different departments of one enterprise within a single building or within a territory of several square kilometers. Global connections are not used in campus networks. The services of such a network include communication between departmental networks and access to shared enterprise databases, shared fax servers, high-speed modems, and high-speed printers. As a result, employees of each department get access to some files and network resources of other departments. An important service of campus networks is access to corporate databases, regardless of the type of computer they reside on.

It is at the campus network level that the problems of integrating heterogeneous hardware and software arise. The types of computers, network operating systems, and network hardware may differ from department to department. This is the source of the complexity of managing campus networks. Administrators here need to be more qualified, and the tools for operational network management more advanced.

Corporate networks are also called enterprise-wide networks. They connect a large number of computers across all sites of a single enterprise. They can be intricately connected and cover a city, a region, or even a continent. The number of users and computers may be in the thousands and the number of servers in the hundreds, and the distances between the networks of individual sites may be such that global links become necessary. To connect remote local networks and individual computers in a corporate network, various telecommunication means are used, including telephone channels, radio channels, and satellite communications. A corporate network can be thought of as "LAN islands" floating in a telecommunications environment.

A corporate network will inevitably use various types of computers, from mainframes to personal computers, several types of operating systems, and many different applications. The heterogeneous parts of the corporate network should work as a whole, giving users access to all necessary resources that is as transparent as possible.

When combining separate networks of a large enterprise with branches in different cities and even countries into a single network, many quantitative characteristics of the unified network exceed a certain critical threshold, beyond which a new quality begins.

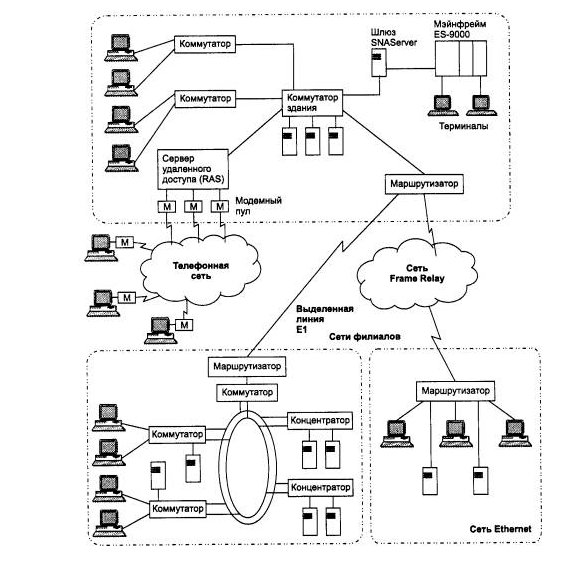

Figure - An example of a corporate network

Take the management of user credentials as an example. The simplest approach is to place the credentials of each user in the local account database of every computer whose resources the user must be able to access. When access is attempted, this data is retrieved from the local account database, and access is granted or denied on that basis. For a small network of 5-10 computers and about the same number of users, this method works very well. But if the network has several thousand users, each of whom needs access to several dozen servers, this solution obviously becomes extremely inefficient. The administrator must repeat the operation of entering a user's credentials several dozen times. The user is also forced to repeat the login procedure every time they need access to the resources of a new server. A good solution for a large network is a centralized directory service whose database stores the accounts of all network users. The administrator enters a user's data into this database once, and the user logs in once, not to a separate server but to the entire network.
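The scaling argument can be made concrete with a little arithmetic. The sketch below counts how many account entries an administrator has to create when every server keeps its own account database, and how many when one central database holds every account. The function names and the figures (2000 users, 30 servers per user) are assumptions picked only to match "several thousand users" and "several dozen servers".

```python
def local_account_entries(users: int, servers_per_user: int) -> int:
    """Entries to create when each server stores its own copy of every account it needs."""
    return users * servers_per_user


def central_account_entries(users: int) -> int:
    """Entries to create when a single central database stores each account once."""
    return users


if __name__ == "__main__":
    users, servers_per_user = 2000, 30  # illustrative assumptions, not measured data
    print(local_account_entries(users, servers_per_user))  # 60000
    print(central_account_entries(users))                  # 2000
```

The same factor applies to logins: with local databases a user authenticates once per server, with a central database once per session.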

As you move from a simpler type of network to a more complex one, from departmental networks to corporate networks, the network must become increasingly reliable and fault-tolerant, while the requirements for its performance also increase significantly. As the network grows, so does its functionality. An increasing amount of data circulates on the network, and the network must keep it both secure and available. Connections that provide interoperability should be more transparent. With each transition to the next level of complexity, the computer equipment of the network becomes more diverse and geographical distances increase, which makes these goals harder to achieve and the management of such connections more problematic and costly.

Network topologies and media access methods

Network topology characterizes the relationship and spatial arrangement relative to each other of network components - network computers (hosts), workstations, cables and other active and passive devices.

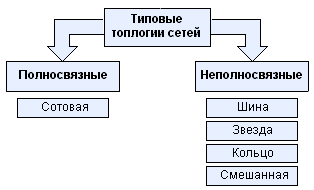

Network topologies can be divided into two main groups: fully connected and non-fully connected.

Topology affects:

composition and characteristics of network equipment;

network expansion options;

network management method.

All networks are built on the basis of three basic topologies:

bus;

star;

ring.

The media access method determines how a shared resource, the network cable, is made available to network nodes for transmitting data. The main methods of access to the transmission medium are:

contention (carrier sense multiple access with collision detection - CSMA/CD);

token passing;

demand priority.

Have you ever played online? Did you like it? Playing against bots does not compare... Unlike bots, people can come up with and pull off tricks that make you involuntarily take your hat off (remember, for example, the “rocket jump”). But in most cases you have to play by modem, to the displeasure of your household, because the phone is busy for hours. Local networks are not yet available everywhere, so sometimes you have to tie up the phone even though your rival lives in the neighboring apartment (through the wall) or in the house opposite. Or there may be two computers, a “new” one and an “old” one, in the same apartment, side by side or in neighboring rooms. Are there really no options other than a LAN? And conditions differ for everyone: not everyone will dare to run a coaxial or twisted-pair cable through the stairwell, knowing that irresponsible citizens may simply cut the wiring.

There are various options for solving this problem. Let's start, as always, with the simplest.

Ah, the wires...

Do you know what a null modem is? It is simply a cable for connecting computers through their ports. The easiest option is to solder such a cable for the COM port. The cable can be up to 15 meters long, but at the maximum length the transmission speed drops because the quality of the connecting wires begins to matter (academically speaking, this is already a “long line with distributed parameters”).

The most “low-budget” version of this cable needs only 3 wires. One of them is the common wire, the other two carry signals. It is desirable to use shielded signal wires. This gives two channels, one for reception and one for transmission. Jumpers are installed on the connector pins to signal the presence of a device ready for data exchange. Everything then works like this: if the receiving device can no longer accept data, it sends the XOFF character (byte 13h) on the reverse channel. The opposite device, having received this character, stops transmitting. When the receiving device is ready again, it sends the XON character (byte 11h), upon receipt of which the opposite device resumes transmission. This method of exchange bears the tricky name “XON/XOFF software flow control protocol”. Its advantage is a minimum of wires, because there are no control signals. Its disadvantages are the need for a buffer for received data and an increased response time (because of waiting for the XON signal). That is why overall performance in this case is not the highest. This is just one type of "Direct Cable Connection"; in Windows it is located in the menu "Programs - Accessories - Communication - Direct Cable Connection". If you do not have it there, install it; it may come in handy...
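The XON/XOFF exchange can be illustrated with a small simulation. This is only a sketch under stated assumptions: the `Receiver` class, the `transfer` function and the buffer thresholds (`high_water`, `low_water`) are invented for the example and do not model a real serial port or any particular driver; only the two control byte values come from the text.

```python
from collections import deque

XON = 0x11   # control byte: "you may resume sending"
XOFF = 0x13  # control byte: "stop sending"


class Receiver:
    """Receiver with a small buffer that asks the sender to pause when it fills up."""

    def __init__(self, high_water: int = 4, low_water: int = 1):
        self.buffer = deque()
        self.high_water = high_water  # assumed threshold for sending XOFF
        self.low_water = low_water    # assumed threshold for sending XON
        self.paused = False

    def receive(self, byte: int):
        """Store one byte; return XOFF if the sender should stop, otherwise None."""
        self.buffer.append(byte)
        if not self.paused and len(self.buffer) >= self.high_water:
            self.paused = True
            return XOFF
        return None

    def consume(self):
        """Process one buffered byte; return XON once there is room again, otherwise None."""
        if self.buffer:
            self.buffer.popleft()
        if self.paused and len(self.buffer) <= self.low_water:
            self.paused = False
            return XON
        return None


def transfer(data: bytes, receiver: Receiver) -> list:
    """Send data byte by byte, obeying XON/XOFF; return the control bytes exchanged."""
    control_log = []
    pending = deque(data)
    sending = True
    delivered = 0
    while delivered < len(data):
        if sending and pending:
            if receiver.receive(pending.popleft()) == XOFF:
                sending = False          # sender stops until XON arrives
                control_log.append(XOFF)
        else:
            reply = receiver.consume()   # receiver works through its buffer
            delivered += 1
            if reply == XON:
                sending = True           # sender resumes
                control_log.append(XON)
    return control_log
```

Calling `transfer(bytes(range(6)), Receiver())` with these thresholds pauses the sender once and resumes it once, which is the waiting time the text lists as a disadvantage.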

Note: if you are using a null modem cable, you MUST connect the computer cases with a wire thick enough to equalize the potentials of the cases - otherwise the equalizing current will flow through the serial port chips, which they will not like. Before connecting any devices to the ports, you must turn off the power of both of them - both the computer and the device.

That is fine if the computers are nearby or, at worst, on opposite sides of a wall in neighboring rooms (or apartments). But what if your friend's house is opposite, 50-100 meters away? And he does not have a phone in his apartment yet (for example, because it is a newly built area)? Then you will have to make an effort and bring in resources and imagination...

"Bluetooth"? No, Red Eye!

Recently, "Chinese" laser pointers have been sold in our markets in unlimited quantities at quite reasonable prices. Naturally, craftsmen in different countries (and not only here) began to use them in a variety of circuits, from alarms to intercoms. And, of course, a device was invented for connecting computers at a distance of about 200 meters (with a direct line of sight, of course). And here is its diagram:

It is given specifically in the “original” form, in which it was published in the magazine “Practicka elektronica A Radio”, No. 3/2001, author - Jack Katz. Here's how it all works:

The laser beam hits the phototransistor (its peak spectral sensitivity lies in the range 550...1050 nm), then passes through two series-connected Schmitt triggers to the input of the microcircuit. The Schmitt triggers are needed to increase the slope of the signal edges. (Don't laugh, the term "slope" in radio engineering is real. For example, how do you like the expression: "He was as steep as the edge of a square wave..."?) The MAX232A chip converts TTL/CMOS signals into signals that the computer's serial port understands, with levels of approximately ±10 Volts (and vice versa, of course). This circuit uses one channel for receiving and one for transmitting (the microcircuit actually has two independent receive channels and two transmit channels, so it can serve two devices). The microcircuit contains a built-in voltage converter, so a single +5 Volt power supply is enough for it. The converter needs external capacitors with a capacitance in the range from 0.1 to 1 μF. From the output of the microcircuit, the signal goes to the cable leading to the computer's COM port. The jumpers on the connector are soldered in exactly the same way as in the previous version.

The signals from the computer are fed to the input of the microcircuit, and after the conversion they go to other Schmitt triggers, which do the same as the previous ones. Then the signal comes to six powerful inverters connected in parallel - this allows you to provide a current of about 35 mA, which is required by the pointer (the wavelength of the emitted light is about 670 nm). The diodes connected in series with the pointer “quench” the excess voltage (about 0.7 - 1 Volt drops on each of them). The supply voltage of +5 Volts is stabilized by a 7805 microcircuit. The exchange rate was promised at about 200 kbps (strange, the port speed is usually 115200, did they “overclock” it, or what?).

This circuit is quite simple to manufacture, there should not be any difficulties with tuning. The computer is simply obliged to perceive it in the same way as the previous cable - in the form of a “Direct cable connection”.

Harsh reality

Naturally, there was a desire to build such a thing and test it on volunteers. Volunteers were found quickly, but there were problems with the parts. Instead of a MAX232A, they sent a P233MMX (I hope the difference is clear?..). The required phototransistor was not available either. So I had to devise a replacement from the parts at hand. There are a lot of parts, but they are needed to make the signals comply with the RS232 standard. It all looks scary, of course, but it works quite satisfactorily. (However, while a replacement was being sought and developed, the volunteers disappeared somewhere...) The elements of this circuit can be used in other devices. The circuit works like this:

The signals from the serial port of the computer are fed to the input of a switching circuit built on transistors VT1, VT2, VT3 and a trigger on logic elements DD1.2 and DD2.2. If the polarity of the signal is positive, the input voltage opens transistor VT1 through diodes VD1 and VD2. A low voltage level appears at the input of the trigger, and “1” is set at its output. If the voltage at the input of the circuit is negative, transistor VT2 opens, then VT3. The voltage at pin 5 changes from high to low, and the RS flip-flop switches to the other state. Series-connected Schmitt triggers are connected to the trigger output. Next come six powerful inverters connected in parallel, to whose outputs the laser pointer is connected.

Such a sophisticated circuit is needed in order for the transmitter to respond only to signals that are in the range from -10 ... -3 to + 3 ... + 10 Volts. Moreover, while the signal fluctuates between -3 ... +3 Volts, the output signal does not change. This interval is called the “dead zone”.
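The behavior of the dead zone can be expressed in a few lines. The function below is only a software illustration of the rule, not a model of the transistor circuit; the name `transmitter_state`, the inclusive ±3 V boundaries and the choice of `1` for a positive input (as in the description of the trigger) are assumptions made for the example.

```python
def transmitter_state(voltage: float, previous: int) -> int:
    """Return the trigger state for a given input voltage.

    +3 V and above sets the state to 1, -3 V and below sets it to 0,
    and anything in between (the dead zone) leaves the previous state unchanged.
    """
    if voltage >= 3.0:
        return 1
    if voltage <= -3.0:
        return 0
    return previous


def follow(voltages, initial: int = 0) -> list:
    """Apply transmitter_state to a sequence of samples, carrying the state along."""
    states = []
    state = initial
    for v in voltages:
        state = transmitter_state(v, state)
        states.append(state)
    return states
```

For the samples `[10, 2, -1, -5, 0, 4]` and an initial state of 0, `follow` returns `[1, 1, 1, 0, 0, 1]`: noise inside the dead zone never flips the output.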

The light beam from the opposite transmitter hits the lens of the photodiode connected to the base of transistor VT4. Next come Schmitt triggers again, then the input of the A1 microcircuit. A “reference” voltage, with which the input signal is compared, is applied to the other input of the microcircuit. The reference voltage is obtained from the forward voltage drop across diodes VD7 and VD8. Transistors VT5 and VT6 are connected to the output of the operational amplifier. This way of using an operational amplifier is called a "comparator", a comparison device. Depending on whether the voltage at the output of the op-amp is positive or negative, transistor VT5 or VT6 opens, respectively. The signal is applied to the output connector through a resistor that limits the current in case of a short circuit in the line.

Switches S1 and S2 are required when setting up the circuit. For example, using switch S1, it is necessary to ensure that the laser glows in the “initial” state (or when configured without connecting to a computer). When switching S2, the polarity of the signal at the output of the circuit (at the COM port connector) should jump from positive to negative. (In most cases, immediately after a reboot, the polarity of the TD signal is precisely negative, although this may depend on the specific motherboard and BIOS version. This is why switch S2 is used - in case you need to reverse the signal polarity.)

Advice

To power this “optical modem”, you can use simple power supply units, for example “Chinese” ones, choosing them with a maximum current of about 500 mA. The voltage can be stabilized with a K142EN5B microcircuit (at 5 volts). For the first option, this will be enough. The second option is more difficult, since it needs a bipolar supply. But the problem is solved very simply: just buy a second power supply (preferably similar to the first one) and connect everything according to the diagram:

The voltage switches in the power supplies are not shown in the diagram. Use them to set the voltage to 10 volts under load. Photodiodes can be taken from unusable "mice". If you plan only short sessions, try photodiodes in plastic cases, but if you plan to install this system “seriously and for a long time”, it is better to look for photodiodes with glass lenses (for example, something like the FD-256, often used in the receivers of TV remote control systems). The receiving photodiodes must be placed in a tube blackened on the inside, about 1 cm in diameter and 4-5 cm long, to exclude stray light from the sides. Power is supplied to the laser pointer as follows: “+” to the body of the pointer, “-” to the internal contact.

ATTENTION! When working with a laser pointer, be careful - do not direct its beam into the eyes of people or animals, you can seriously damage your eyesight!

It is best to mount the laser pointer and the tube with the photodiode together (“coaxially”) on something like a camera tripod, so that they can be tilted and turned in any direction. Otherwise, you will have to improvise something out of wire and be prepared for the alignment to be knocked off by the wind at the most unexpected moment. Aiming the units at each other is best done early in the morning or in the evening, when the sunlight is not so bright. It also helps to have binoculars at hand, even “theater” ones.

When installing the laser-photodiode unit in the right place (preferably on a loggia or balcony), avoid getting the laser beam into other people's windows - very nervous people can live there, and disgruntled comrades in bulletproof vests or taciturn gentlemen in leather jackets can unexpectedly come to visit you (depending on who these nervous people will call ...).

It is better to assemble the receiving-transmitting unit in a separate small case. To reduce the number of connecting wires, you can connect it to the main circuit like this:

Two shielded cables are used, preferably short. The insulation on the cables must be strong enough - after all, +5 Volt supply voltage is supplied through the braid of one cable, and the “case” is supplied through the braid of the other. The center conductors go to the pins of the microcircuits. Naturally, the cable braids in this case must be well isolated from each other.

Most likely, it will be difficult to get connectors for the COM port. You can get out of the situation like this: find four faulty mice with the right connectors. Carefully cut the connectors open and remove the insert with the contacts (as a rule, it is embedded in plastic, which you will see as soon as you start the “opening”). There will be only four contacts; the rest you will have to install yourself. To do this, carefully drill holes in the right places from the wire side with a round file and press the missing contacts tightly into them. Pin #9 is not needed in this circuit. You can practice at first, since you need only two of the four connectors.

If the brightness of the “pointer” glow is insufficient, it is enough to remove one of the series-connected diodes.

The operational amplifier can be replaced with another one, as long as it is classified as “high-speed” and can withstand a voltage at the inputs of at least 3 volts. If its performance is insufficient, you will not get the maximum data transfer rate.

The diodes in the circuit are any pulse diodes that can withstand a forward current of at least 50 mA. If during operation they noticeably heat up, you will have to replace them with more powerful ones.

It remains to be hoped that there will be no particular difficulties in building and installing this device, and that the pleasure of using it will make up for all the trouble. It is great if you can get a MAX232A, because then everything will be quite simple. The data transfer rate will probably be low compared with "proprietary" optical and radio modems. But the cost of parts should be minimal.

Via network card

Connecting two or more computers over twisted pair is the cheapest, high-quality and high-speed connection method.

This connection requires a network card. Almost all modern motherboards have one built in. You can check by looking at the back panel: above the USB ports there should be a small connector similar to a telephone jack, only larger (a telephone plug will fit into it, but the network will not work with a telephone cable). It also would not hurt to read the motherboard documentation. If there is no built-in network card, you can buy one that plugs into a PCI slot.

The speed of such a connection can reach 1 Gbps, depending on the network card. When connecting more than two computers, you need to purchase a switch. With additional switches, such a network can be extended to a very large number of computers.

Not only games

And now let's move on to the practical application of the connections described above. Here is a typical example of a “question from the people” and an answer from the Internet (many thanks to WINDOWS “ripper” Sergey Troshin!):

We have two computers but only one modem, and we would like Internet access from both. Is it possible to do this without installing network cards?

It's pretty simple. We take a cable to connect two computers via parallel ports (in this case, for COM ports), a Dial-Up adapter is installed on both. All this will be managed by WinRoute home 3.04 (or 4.0, 4.1…)

The “cable” connection is already built into Windows95/98. It is used by Parallel sharing. All that is needed in the first step is to install a dial-up adapter on each computer. Check that both computers with W95/98 have the DUN1.3 (dial-up networking upgrade) upgrade - this upgrade is on the Microsoft website, just go to their “search engine” and search for exactly “DUN 1.3” - the result will be a lot of necessary links. Win95 OSR2 or Win98 already has everything you need, and Windows NT doesn't support parallel port connections (but serial?).

Also, make sure that DCC (Direct Cable Connection) is installed on both computers. Check that each computer's network neighbors contain a dial-up-adapter (the host machine must have two dial-up adapters installed). Install WinRoute Home on the host computer. Make sure that the TCP/IP protocol is installed and bound on the second computer as follows: TCP/IP->Dial up adapter. In the settings, you only need the DNS field - enter the provider's DNS server or host machine address there. On the host machine, set up the TCP/IP adapter (ie modem) as usual - all settings as required by the ISP. The settings of the dial-up adapter that is associated with the DCC can be left alone. Start WinRoute on the host machine, start DCC. Do not set the "Connection via proxy" option in browsers. The connection speed to the host machine via DCC is up to 4 Mbps. (Not for the COM port, of course...) The list of games that support a direct connection, those who wish can search for themselves.

By the way, have you read the book “The Hyperboloid of Engineer Garin”?..

There are three main ways to organize computer-to-computer communication:

- connection of two adjacent computers through their communication ports using a special cable;

- transferring data from one computer to another via a modem over wired, wireless or satellite communication lines;

- joining computers into a computer network.

Often, when a connection is established between two computers, one computer is assigned the role of resource provider (programs, data, etc.) and the other the role of user of these resources. In this case the first computer is called the server, and the second the client or workstation. You can work on a client computer only when it is running special software.

A server (from the English serve) is a high-performance computer with a large amount of external memory that provides service to other computers by managing the allocation of expensive shared resources (programs, data, and peripherals).

To overcome interface incompatibilities between individual computers, special standards called communication protocols are developed.

Communication protocols prescribe dividing the entire amount of transmitted data into packets, separate blocks of a fixed size. Packets are numbered so that they can then be assembled in the correct sequence. Additional information is added to the data contained in the packet, approximately in the following format:

The checksum of the packet data contains the information needed for error control. It is first calculated by the transmitting computer. After the packet is transmitted, the checksum is recalculated by the receiving computer. If the values do not match, the packet data was damaged in transit. Such a packet is discarded, and a request to retransmit the packet is sent automatically.
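The packet mechanism can be sketched in a few lines. This is a minimal illustration, not the format of any particular protocol: the dictionary layout, the 8-byte packet size and the use of CRC-32 (via Python's standard `zlib.crc32`) as the checksum are all assumptions made for the example, and the last packet may be shorter than the others.

```python
import zlib

PACKET_SIZE = 8  # payload bytes per packet; an arbitrary value for the example


def make_packets(data: bytes, size: int = PACKET_SIZE) -> list:
    """Split data into numbered packets, each carrying a checksum of its payload."""
    packets = []
    for number, start in enumerate(range(0, len(data), size)):
        payload = data[start:start + size]
        packets.append({
            "number": number,                  # used to restore the original order
            "payload": payload,
            "checksum": zlib.crc32(payload),   # calculated by the sender
        })
    return packets


def is_intact(packet: dict) -> bool:
    """Recalculate the checksum on the receiving side and compare it with the sent one."""
    return zlib.crc32(packet["payload"]) == packet["checksum"]


def reassemble(packets: list) -> bytes:
    """Put packets back in order; raise if a damaged packet needs retransmission."""
    ordered = sorted(packets, key=lambda p: p["number"])
    for packet in ordered:
        if not is_intact(packet):
            raise ValueError(f"packet {packet['number']} is damaged, retransmission needed")
    return b"".join(packet["payload"] for packet in ordered)
```

Packets may arrive in any order: `reassemble(list(reversed(make_packets(data))))` still returns the original `data`, while a packet whose payload was altered in transit fails `is_intact` and would be requested again.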

A computer network is a collection of nodes (computers, workstations, etc.) and the branches connecting them.

A network branch is a path connecting two adjacent nodes.

Network nodes are of three types:

- terminal node - located at the end of only one branch;

- intermediate node - located at the ends of more than one branch;

- adjacent node - such nodes are connected by at least one path that does not contain any other nodes.
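These definitions translate directly into code if a network is written down as a list of branches. The sketch below is only an illustration; the function names and the representation of a branch as a pair of node names are assumptions made for the example.

```python
from collections import defaultdict


def node_degrees(branches) -> dict:
    """Count how many branches end at each node."""
    degrees = defaultdict(int)
    for a, b in branches:
        degrees[a] += 1
        degrees[b] += 1
    return dict(degrees)


def classify_nodes(branches) -> dict:
    """Label each node 'terminal' (one branch) or 'intermediate' (more than one)."""
    return {
        node: "terminal" if degree == 1 else "intermediate"
        for node, degree in node_degrees(branches).items()
    }


def are_adjacent(branches, a, b) -> bool:
    """Two nodes are adjacent if a single branch connects them directly."""
    return (a, b) in branches or (b, a) in branches
```

For the chain `[("A", "B"), ("B", "C"), ("C", "D")]`, nodes A and D come out as terminal, B and C as intermediate, and A is adjacent to B but not to C.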

Computers can be networked in different ways.

The most common types of network topologies are:

Linear network. Contains only two end nodes and any number of intermediate nodes, and has only one path between any two nodes.

Ring network. A network in which each node has exactly two branches attached to it.

Tree network. A network that contains more than two end nodes and at least two intermediate nodes, and in which there is only one path between any two nodes.

Star network. A network that has only one intermediate node.

Mesh network. A network that contains at least two nodes that have two or more paths between them.

Fully connected network. A network that has a branch between any two nodes.

The most important characteristic of a computer network is its architecture.

The most common architectures:

- Ethernet (from the English "ether") - a broadcast network, meaning that all stations on the network can receive all messages. Topology - linear or star. Data transfer rate - 10 or 100 Mbps.

- Arcnet (Attached Resource Computer Network, a computer network of attached resources) - a broadcast network. Physical topology - tree. Data transfer rate - 2.5 Mbps.

- Token Ring (a relay ring network, or token-passing network) - a ring network in which data transmission is based on each node of the ring waiting for the arrival of a short unique bit sequence, the token (marker), from the adjacent previous node. Receipt of the token indicates that the node may pass a message further downstream. Data transfer rate - 4 or 16 Mbps.

- FDDI (Fiber Distributed Data Interface) - a network architecture for high-speed data transmission over fiber optic lines. Transfer rate - 100 Mbps. Topology - double ring or mixed (including star or tree subnets). Maximum number of stations in the network - 1000. Very high cost of equipment.

- ATM (Asynchronous Transfer Mode) - a promising but still very expensive architecture that transmits digital data, video, and voice over the same lines. Transfer rate - up to 2.5 Gbps. Uses optical communication lines.
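The token-passing principle described for Token Ring can be illustrated with a small simulation. This is a deliberately simplified sketch: it assumes a node sends at most one message per token visit, and it does not model real Token Ring frames, priorities, or ring monitoring. The function and parameter names are hypothetical.

```python
def token_ring_order(nodes: list[str], pending: dict[str, int], start: int = 0) -> list[str]:
    """Return the order in which nodes transmit while the token circulates.

    nodes   - node names listed in ring order
    pending - how many messages each node is waiting to send
    start   - index of the node that holds the token first
    """
    # Ignore entries for nodes that are not on the ring.
    remaining = {node: pending.get(node, 0) for node in nodes}
    sent = []
    position = start
    while any(remaining.values()):
        holder = nodes[position]
        if remaining[holder] > 0:
            # Only the current token holder is allowed to transmit.
            sent.append(holder)
            remaining[holder] -= 1
        # Pass the token to the next node downstream.
        position = (position + 1) % len(nodes)
    return sent
```

With the ring `["A", "B", "C", "D"]` and pending messages `{"C": 2, "A": 1}`, the transmission order is A, C, C: node C has to wait for the token to travel all the way around the ring before sending its second message.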

Most residents of modern cities transmit or receive data of some kind every day. It can be computer files, a television picture, a radio broadcast: anything that represents a certain portion of useful information. There are a huge number of technological methods of data transmission. At the same time, in many segments of information solutions, the corresponding channels are being modernized at an incredibly dynamic pace. Conventional technologies, which would seem quite capable of satisfying human needs, are being replaced by new, more advanced ones. Until recently, access to the Web through a cell phone was considered almost exotic, but today this option is familiar to most people. Modern file transfer speeds over the Internet, measured in hundreds of megabits per second, would have seemed fantastic to the first users of the World Wide Web. Through what types of infrastructure can data be transferred? What might be the reason for choosing one channel or another?

Basic Data Transfer Mechanisms

The concept of data transmission can be associated with various technological phenomena. In general, it is associated with the computer communications industry. In this sense, data transfer is the exchange (sending and receiving) of files, folders, and other machine-readable data.

The term can also apply to the non-digital sphere of communications. For example, the transmission of TV and radio signals and the operation of telephone lines, unless modern high-tech tools are involved, can rely on analog principles. In this case, data transmission is the transmission of electromagnetic signals through one channel or another.

Mobile communication can occupy an intermediate position between the two technological implementations of data transmission, digital and analog. Some of the relevant communication technologies belong to the first type (for example, GSM communication and 3G or 4G Internet), while others are less computerized and can therefore be considered analog (for example, voice communication in the AMPS or NTT standards).

However, the modern trend in the development of communication technologies is that data transmission channels, no matter what type of information passes through them, are being actively "digitized". In large Russian cities, it is difficult to find telephone lines that operate according to analog standards. Technologies like AMPS are gradually losing relevance and being replaced by more advanced ones. TV and radio are becoming digital. Thus, we can consider modern data transmission technologies mainly in a digital context. That said, the history of how particular solutions were adopted is well worth exploring.

Modern data transmission systems can be classified into three main groups: those implemented in computer networks, those used in mobile networks, and those that underlie TV and radio broadcasting. Let's consider their specifics in more detail.

Data transmission technologies in computer networks

The main subject of data transfer in computer networks, as we noted above, is a collection of files, folders, and other machine-readable data structures (for example, arrays, stacks, etc.). Modern digital communications can operate on the basis of a variety of standards. Among the most common is TCP/IP. Its main principle is to assign a unique IP address to each computer, which serves as the main reference point in data transfer.
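As a small illustration of IP addresses serving as reference points, the sketch below uses Python's standard `ipaddress` module. The network `192.168.1.0/24` and the host addresses are hypothetical values chosen for the example.

```python
import ipaddress

# Hypothetical private network and host addresses, used only for illustration.
NETWORK = ipaddress.ip_network("192.168.1.0/24")


def same_network(address: str, network=NETWORK) -> bool:
    """Check whether an IP address belongs to the given network."""
    return ipaddress.ip_address(address) in network


def all_unique(addresses: list[str]) -> bool:
    """Each computer needs its own address: report whether any is duplicated."""
    parsed = [ipaddress.ip_address(a) for a in addresses]
    return len(set(parsed)) == len(parsed)
```

Here `same_network("192.168.1.10")` is true, `same_network("10.0.0.5")` is false, and `all_unique` flags a list in which two computers were given the same address.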

File exchange in modern digital networks can be carried out using wired technologies or wireless ones. Wired infrastructures can be classified by the specific type of cable. In modern computer networks, the most commonly used are:

- twisted pair;

- fiber optic cables;

- coaxial cables;

- USB cables;

- telephone wires.

Each of these cable types has both advantages and disadvantages. For example, twisted pair is a cheap, versatile, and easy-to-install type of cable, but it is significantly inferior to fiber in terms of bandwidth (we will consider this parameter in more detail a little later). USB cables are the least suitable for data transfer within computer networks, but they are compatible with almost any modern computer: it is extremely rare to find a PC that is not equipped with USB ports. Coaxial cables are well protected from interference and allow data transmission over very long distances.

Characteristics of computer data networks

It is useful to study some key characteristics of the computer networks in which files are exchanged. Among the most important parameters of such infrastructure is throughput (bandwidth). This characteristic indicates the maximum speed and volume of data that can be transmitted in the network. Both of these parameters are, in fact, key in their own right. The data transfer rate is the actual measure of how much data can be transferred from one computer to another in a given amount of time. It is most often expressed in bits per second (in practice, as a rule, in kilobits, megabits, or gigabits, and in powerful networks in terabits).
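Because rates are quoted in bits per second while file sizes are usually given in bytes, a quick calculation helps to estimate transfer time. The sketch below assumes decimal units (1 MB = 1,000,000 bytes, 1 Mbps = 1,000,000 bits per second) and ignores protocol overhead, so real transfers will take somewhat longer.

```python
BITS_PER_BYTE = 8
MBPS = 1_000_000  # bits per second in one megabit per second (decimal units)


def transfer_time_seconds(size_bytes: int, rate_bits_per_second: float) -> float:
    """Ideal time to move size_bytes over a channel with the given bit rate."""
    return size_bytes * BITS_PER_BYTE / rate_bits_per_second


file_size = 700 * 1_000_000  # a 700 MB file, chosen as an example
print(transfer_time_seconds(file_size, 100 * MBPS))  # 56.0 seconds at 100 Mbps
print(transfer_time_seconds(file_size, 10 * MBPS))   # 560.0 seconds at 10 Mbps
```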

Classification of computer data transmission channels

Data exchange in a computer infrastructure can be carried out through three main types of channels: duplex, simplex, and half-duplex. A duplex channel assumes that a device transmitting data to the PC can also act as a receiver at the same time. Simplex devices, in turn, work in one direction only (for example, they are only capable of receiving signals). Half-duplex devices can both receive and transmit files, but only in turn.
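The difference between the three channel types can be captured in a few lines. This sketch assumes the common definitions: a simplex channel carries data in one direction only, a half-duplex channel carries it in both directions but not at the same time, and a duplex channel carries it in both directions simultaneously. The names are hypothetical.

```python
from enum import Enum


class ChannelMode(Enum):
    SIMPLEX = "simplex"
    HALF_DUPLEX = "half-duplex"
    DUPLEX = "duplex"


def supports_two_way_exchange(mode: ChannelMode) -> bool:
    """True if data can flow in both directions (at once or in turn)."""
    return mode is not ChannelMode.SIMPLEX


def supports_simultaneous_exchange(mode: ChannelMode) -> bool:
    """True only if sending and receiving can happen at the same moment."""
    return mode is ChannelMode.DUPLEX
```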

Wireless data transmission in computer networks is most often carried out using the following standards:

- "short range" (Bluetooth, infrared ports);

- "medium range" - Wi-Fi;

- "long range" - 3G, 4G, WiMAX.

The speed at which files are transferred can vary greatly depending on the communication standard, as well as on the stability of the connection and its immunity to interference. Wi-Fi is considered one of the optimal solutions for organizing home and intracorporate computer networks. If data must be transmitted over long distances, 3G, 4G, WiMAX, or competing technologies are used. Bluetooth remains in demand, and infrared ports to a lesser extent, since activating them requires practically no fine-tuning by the user of the devices through which files are exchanged.

The "short range" standards are most popular in the mobile device industry. For example, data transfer to Android from another device running the same or a compatible OS is often carried out using Bluetooth. However, mobile devices can also be successfully integrated with computer networks, for example, using Wi-Fi.

A computer data transmission network functions through the use of two resources: hardware and the necessary software. Both are required for full-fledged file exchange between PCs. Data transfer programs can be used in a variety of ways. They can be loosely classified by their scope of application.

There is user software adapted for working with web resources; such solutions include browsers. There are programs used as tools for voice communication, supplemented by the ability to organize video chats, for example, Skype.

There is software that belongs to the system category. Such solutions may hardly ever be invoked directly by the user, but their operation may be necessary to ensure the exchange of files. As a rule, such software runs as background programs within the operating system. These types of software allow you to connect a PC to a network infrastructure. On the basis of such connections, user tools can then be used: browsers, programs for organizing video chats, etc. System solutions are also important for the stability of network connections between computers.

There is software designed to diagnose connections. If a data transfer error interferes with a reliable connection between PCs, it can be identified using a suitable diagnostic program. The use of various types of software is one of the key criteria for distinguishing between digital and analog technologies. With a traditional (analog) data transmission infrastructure, software solutions, as a rule, have incomparably less functionality than in networks built on digital concepts.

Data transmission technologies in cellular networks

Let us now study how data can be transferred in another large-scale infrastructure: cellular networks. In considering this technological segment, it is useful to pay attention to the history of the relevant solutions, because the standards by which data is transmitted in cellular networks are developing very dynamically. Some of the solutions discussed above that are used in computer networks remain relevant for many decades. This is especially evident in wired technologies: coaxial cable, twisted pair, and fiber optic cables were introduced into computer communications a very long time ago, but their potential is far from exhausted. In the mobile industry, by contrast, new concepts appear almost every year and are put into practice with varying degrees of intensity.

The evolution of cellular communication technology begins with the introduction, in the early 1980s, of the earliest standards such as NMT. Its capabilities were not limited to providing voice communications. Data transfer via NMT networks was also possible, but at a very low speed: about 1.2 Kbps.

The next step in technological evolution in the cellular communications market was associated with the introduction of the GSM standard. Its data transfer rate was much higher than that of NMT: about 9.6 Kbps. Subsequently, the GSM standard was supplemented by HSCSD technology, which allowed cellular subscribers to transmit data at a speed of 57.6 Kbps.

Later, the GPRS standard appeared, which made it possible to separate typically “computer” traffic transmitted in cellular channels from voice traffic. The data transfer rate when using GPRS could reach about 171.2 Kbps. The next technological solution implemented by mobile operators was the EDGE standard. It made it possible to provide data transmission at a speed of 326 Kbps.

The development of the Internet required developers of cellular communication technologies to introduce solutions that could become competitive with wired standards - primarily in terms of data transfer speed, as well as connection stability. A significant step forward was the introduction of the UMTS standard to the market. This technology made it possible to provide data exchange between subscribers of a mobile operator at a speed of up to 2 Mbps.

Later, the HSDPA standard appeared, in which the transmission and reception of files could be carried out at speeds up to 14.4 Mbps. Many digital industry experts believe that since the introduction of HSDPA technology, cellular operators have begun to compete directly with cable ISPs.

At the end of the 2000s, the LTE standard and its competing analogues appeared, through which subscribers of mobile operators were able to exchange files at a speed of several hundred megabits per second. Such resources are not always available even to users of modern wired channels. Most Russian providers offer their subscribers a data transmission channel at a speed not exceeding 100 Mbps, and in practice most often several times less.
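To get a feel for these figures, the sketch below compares how long it would ideally take to send a 1 MB file at the peak rates quoted above. LTE is left out because the text gives no exact figure for it. The calculation assumes decimal units (1 Kbps = 1,000 bits per second, 1 MB = 1,000,000 bytes) and ignores protocol overhead; real speeds were typically well below these peaks.

```python
# Peak rates in Kbps, taken from the figures quoted in the text above.
PEAK_RATES_KBPS = {
    "NMT": 1.2,
    "GSM": 9.6,
    "HSCSD": 57.6,
    "GPRS": 171.2,
    "EDGE": 326,
    "UMTS": 2000,
    "HSDPA": 14400,
}


def seconds_to_send(size_bytes: int, rate_kbps: float) -> float:
    """Ideal time to send size_bytes at the given rate in kilobits per second."""
    return size_bytes * 8 / (rate_kbps * 1000)


def speedup(newer: str, older: str) -> float:
    """How many times faster the newer standard's peak rate is."""
    return PEAK_RATES_KBPS[newer] / PEAK_RATES_KBPS[older]


for name, rate in PEAK_RATES_KBPS.items():
    print(f"{name:6} {seconds_to_send(1_000_000, rate):10.1f} s")
```

At these peak rates, the same file that would occupy an NMT channel for nearly two hours takes well under a second over HSDPA.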

Generations of cellular technology

The NMT standard is generally classified as 1G. GPRS and EDGE technologies are often classified as 2G, HSDPA as 3G, and LTE as 4G. Each of these solutions has competing analogues. For example, some experts consider WiMAX to be a competitor to LTE. Other competing solutions in the 4G technology market are 1xEV-DO and IEEE 802.20. There is a point of view that it is still not quite correct to classify the LTE standard as 4G, since its maximum speed falls slightly short of the figure defined for conceptual 4G, which is 1 Gbps. Thus, it is possible that in the near future a new standard will appear on the global cellular communications market, perhaps even more advanced than 4G and capable of providing data transfer at such an impressive speed. In the meantime, LTE is among the solutions being implemented most dynamically. Leading Russian operators are actively modernizing their infrastructure across the country: providing high-quality data transmission according to the 4G standard is becoming one of the key competitive advantages in the cellular communications market.

TV broadcast technologies